Introductory Econometrics. Chapter 13

Chapter 13: Pooling Cross Sections across Time: Simple Panel Data Methods

More and more research uses data sets that have both cross sectional and time series dimensions. Such data has some important advantages over purely cross sectional or time series data.

We will consider two types of data sets: independently pooled cross section and panel data.

An independently pooled cross section is obtained by sampling randomly from a large population at different points in time. For example, every year we draw a random sample of workers in a country and ask them about their wages, education and so on. The European Social Survey is one such survey: in the participating countries, a new sample is surveyed every two years. Such data consist of independently sampled observations, which rules out correlation in the error terms across different observations. With such data, it is important to remember and account for the fact that the distributions of wages, education, attitudes and other factors change over time.

A panel (or longitudinal) data set follows the same individuals, firms, cities or countries over time. For example, in the Health and Retirement Study (HRS) of older Americans and the Survey of Health, Ageing and Retirement in Europe (SHARE) of older Europeans, the same individuals are followed over the years and various data about them are collected. This gives us data on their consumption, income, assets, health and other variables in different years. Panel data are very useful because, by following the same person, we can hold fixed some of the unobservables, such as innate ability, that do not change over time.

Let’s start with independently pooled cross sections. There are quite a few examples, among them the Current Population Survey and the European Social Survey. By pooling the random samples over time, we increase the sample size, which gives us more precise estimators and test statistics with more power. Pooling helps in this way only if at least some of the relationships between the dependent and independent variables remain constant over time. Sometimes, the pattern of coefficients on the year dummy variables is itself of interest. For example, one may ask: “After controlling for state demographic and economic factors, what is the pattern of loan discrimination between 1940 and 1980?” This could be answered by using multiple regression analysis with year dummy variables.

Let’s look at Example 13.1 in the textbook. In this example, we use the General Social Survey for the given years to estimate a model explaining the total number of kids born to a woman. The question of interest is how fertility has changed over time after controlling for other observable factors.

library(wooldridge)

data(fertil1, package='wooldridge')

reg1=lm(kids~educ+age+I(age^2)+black+east+northcen+west

+farm+othrural+town+smcity+y74+y76+y78+y80+y82+y84, data=fertil1)

summary(reg1)

##

## Call:

## lm(formula = kids ~ educ + age + I(age^2) + black + east + northcen +

## west + farm + othrural + town + smcity + y74 + y76 + y78 +

## y80 + y82 + y84, data = fertil1)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.9878 -1.0086 -0.0767 0.9331 4.6548

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -7.742457 3.051767 -2.537 0.011315 *

## educ -0.128427 0.018349 -6.999 4.44e-12 ***

## age 0.532135 0.138386 3.845 0.000127 ***

## I(age^2) -0.005804 0.001564 -3.710 0.000217 ***

## black 1.075658 0.173536 6.198 8.02e-10 ***

## east 0.217324 0.132788 1.637 0.101992

## northcen 0.363114 0.120897 3.004 0.002729 **

## west 0.197603 0.166913 1.184 0.236719

## farm -0.052557 0.147190 -0.357 0.721105

## othrural -0.162854 0.175442 -0.928 0.353481

## town 0.084353 0.124531 0.677 0.498314

## smcity 0.211879 0.160296 1.322 0.186507

## y74 0.268183 0.172716 1.553 0.120771

## y76 -0.097379 0.179046 -0.544 0.586633

## y78 -0.068666 0.181684 -0.378 0.705544

## y80 -0.071305 0.182771 -0.390 0.696511

## y82 -0.522484 0.172436 -3.030 0.002502 **

## y84 -0.545166 0.174516 -3.124 0.001831 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.555 on 1111 degrees of freedom

## Multiple R-squared: 0.1295, Adjusted R-squared: 0.1162

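## F-statistic: 9.723 on 17 and 1111 DF, p-value: < 2.2e-16

The coefficients on the year dummies show a drop in fertility in the early 1980s: relative to the base year 1972, women in 1982 and 1984 had about 0.52 and 0.55 fewer children on average, holding the other factors fixed. The other slopes are as predicted: educ is negatively related to the number of kids, the effect of age is positive but diminishing, etc. The model we estimated here assumes that the effects of the explanatory variables are constant over time. This may or may not be true. One should also check for heteroskedasticity in the error term and, if it is present, use heteroskedasticity-robust standard errors.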
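The remark about heteroskedasticity-robust standard errors can be illustrated with a minimal base-R sketch. It uses simulated data (the variables `x` and `y` are hypothetical, not from fertil1) and computes the simplest robust variant, the HC0 "sandwich" estimator, by hand; in practice one would more likely rely on a dedicated package.

```r
# Simulated data with heteroskedastic errors (hypothetical, for illustration)
set.seed(7)
n <- 300
x <- runif(n, 0, 10)
y <- 1 + 0.5 * x + rnorm(n, sd = 0.2 + 0.3 * x)  # error spread grows with x
fit <- lm(y ~ x)

X <- model.matrix(fit)                  # regressor matrix incl. intercept
u <- resid(fit)                         # OLS residuals
bread <- solve(crossprod(X))            # (X'X)^{-1}
meat  <- crossprod(X * u)               # sum over i of u_i^2 * x_i x_i'
vc_robust <- bread %*% meat %*% bread   # HC0 sandwich variance estimator
se_robust <- sqrt(diag(vc_robust))

# Compare the usual and the robust standard errors side by side
print(cbind(usual = sqrt(diag(vcov(fit))), robust = se_robust))
```

The coefficient estimates are unchanged; only the standard errors (and hence the t statistics) differ between the two columns.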

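Let’s look at another example: changes in the return to education and the gender wage gap. Counting terms in the order they appear in the reg2a formula, \(\beta_7\) (the coefficient on female) measures the \(log(wage)\) differential between women and men in 1978, and \(\beta_8\) (the coefficient on female*y85) measures how that differential changed by 1985. If nothing happened to the gender wage gap from 1978 to 1985, we expect \(\beta_8\) not to be significantly different from 0.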

data(cps78_85, package='wooldridge')

reg2a = lm(lwage ~ y85 + educ + educ*y85 + exper + I(exper^2) + union + female + female*y85, data=cps78_85)

#You can similarly use a shorter notation as shown below.

reg2b = lm(lwage ~ y85*(educ+female) +exper+ I(exper^2) + union, data=cps78_85)

summary(reg2a)

##

## Call:

## lm(formula = lwage ~ y85 + educ + educ * y85 + exper + I(exper^2) +

## union + female + female * y85, data = cps78_85)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.56098 -0.25828 0.00864 0.26571 2.11669

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 4.589e-01 9.345e-02 4.911 1.05e-06 ***

## y85 1.178e-01 1.238e-01 0.952 0.3415

## educ 7.472e-02 6.676e-03 11.192 < 2e-16 ***

## exper 2.958e-02 3.567e-03 8.293 3.27e-16 ***

## I(exper^2) -3.994e-04 7.754e-05 -5.151 3.08e-07 ***

## union 2.021e-01 3.029e-02 6.672 4.03e-11 ***

## female -3.167e-01 3.662e-02 -8.648 < 2e-16 ***

## y85:educ 1.846e-02 9.354e-03 1.974 0.0487 *

## y85:female 8.505e-02 5.131e-02 1.658 0.0977 .

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.4127 on 1075 degrees of freedom

## Multiple R-squared: 0.4262, Adjusted R-squared: 0.4219

## F-statistic: 99.8 on 8 and 1075 DF, p-value: < 2.2e-16

Running the above regression yields the following result: the gender wage gap in 1978 is estimated to be around 32% (coefficient of -0.317 on female, statistically significant at the 5% level). It appears to have narrowed by about 8.5 percentage points by 1985, but this change is significant only at the 10% level in a two-sided test (p = 0.098).

In the example above, we interacted some variables with a time dummy to estimate how the effect of a variable changed over time. Note that if you interact all variables with the time dummy, the coefficient estimates are the same as those from two separate regressions, one on the sample from time period 1 and one on the sample from time period 2. See the code below for the fully interacted regression and for the regressions on each of these samples separately.

data(cps78_85, package='wooldridge')

reg2c = lm(lwage ~ y85 + y85*(educ + exper + I(exper^2) + union + female), data=cps78_85)

summary(reg2c)

##

## Call:

## lm(formula = lwage ~ y85 + y85 * (educ + exper + I(exper^2) +

## union + female), data = cps78_85)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.55317 -0.25818 0.00812 0.26686 2.14184

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.4582570 0.1021541 4.486 8.04e-06 ***

## y85 0.1219978 0.1509545 0.808 0.41917

## educ 0.0768148 0.0069137 11.110 < 2e-16 ***

## exper 0.0249177 0.0050303 4.954 8.46e-07 ***

## I(exper^2) -0.0002844 0.0001083 -2.625 0.00879 **

## union 0.2039824 0.0393500 5.184 2.60e-07 ***

## female -0.3155108 0.0367901 -8.576 < 2e-16 ***

## y85:educ 0.0139270 0.0101560 1.371 0.17057

## y85:exper 0.0095289 0.0071382 1.335 0.18219

## y85:I(exper^2) -0.0002399 0.0001553 -1.544 0.12277

## y85:union -0.0018095 0.0617285 -0.029 0.97662

## y85:female 0.0846136 0.0518164 1.633 0.10277

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.4128 on 1072 degrees of freedom

## Multiple R-squared: 0.4276, Adjusted R-squared: 0.4217

## F-statistic: 72.79 on 11 and 1072 DF, p-value: < 2.2e-16

reg2d = lm(lwage ~ educ + exper + I(exper^2) + union + female, data=cps78_85[which(cps78_85$y85==0),])

reg2e = lm(lwage ~ educ + exper + I(exper^2) + union + female, data=cps78_85[which(cps78_85$y85==1),])

summary(reg2d)

##

## Call:

## lm(formula = lwage ~ educ + exper + I(exper^2) + union + female,

## data = cps78_85[which(cps78_85$y85 == 0), ])

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.55317 -0.23310 0.01338 0.22015 1.29929

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.4582570 0.0957004 4.788 2.17e-06 ***

## educ 0.0768148 0.0064769 11.860 < 2e-16 ***

## exper 0.0249177 0.0047125 5.288 1.80e-07 ***

## I(exper^2) -0.0002844 0.0001015 -2.802 0.00526 **

## union 0.2039824 0.0368640 5.533 4.89e-08 ***

## female -0.3155108 0.0344658 -9.154 < 2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.3867 on 544 degrees of freedom

## Multiple R-squared: 0.3833, Adjusted R-squared: 0.3776

## F-statistic: 67.61 on 5 and 544 DF, p-value: < 2.2e-16

summary(reg2e)

##

## Call:

## lm(formula = lwage ~ educ + exper + I(exper^2) + union + female,

## data = cps78_85[which(cps78_85$y85 == 1), ])

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.19388 -0.28944 -0.00682 0.29548 2.14184

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.5802548 0.1179364 4.920 1.16e-06 ***

## educ 0.0907418 0.0078944 11.494 < 2e-16 ***

## exper 0.0344467 0.0053743 6.409 3.24e-10 ***

## I(exper^2) -0.0005243 0.0001181 -4.438 1.11e-05 ***

## union 0.2021730 0.0504693 4.006 7.07e-05 ***

## female -0.2308973 0.0387206 -5.963 4.53e-09 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.438 on 528 degrees of freedom

## Multiple R-squared: 0.3175, Adjusted R-squared: 0.3111

## F-statistic: 49.13 on 5 and 528 DF, p-value: < 2.2e-16

As you can see, for the year 1985 (reg2e), the intercept is 0.5802548, which is exactly the intercept implied by reg2c for the observations in 1985: 0.4582570+0.1219978. The same holds for the slopes, for example educ: 0.0768148+0.0139270=0.0907418.
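The equivalence between a fully interacted pooled regression and two separate regressions can also be checked on simulated data. The sketch below is only an illustration under assumed values: the data frame `sim` and all of its numbers are hypothetical and are not taken from cps78_85.

```r
# Simulated two-period pooled cross section (hypothetical data)
set.seed(13)
n <- 400
sim <- data.frame(
  y85  = rep(c(0, 1), each = n / 2),          # period dummy
  educ = round(rnorm(n, mean = 12, sd = 2))
)
# Both the intercept and the slope differ between the two periods
sim$lwage <- 0.5 + 0.10 * sim$y85 + (0.07 + 0.02 * sim$y85) * sim$educ +
  rnorm(n, sd = 0.4)

pooled <- lm(lwage ~ y85 * educ, data = sim)               # fully interacted
first  <- lm(lwage ~ educ, data = sim, subset = y85 == 0)  # period 1 only
second <- lm(lwage ~ educ, data = sim, subset = y85 == 1)  # period 2 only

b <- coef(pooled)
# Period-1 coefficients are the base terms of the pooled model
period1 <- c(b[["(Intercept)"]], b[["educ"]])
# Period-2 coefficients add the interaction terms to the base terms
period2 <- c(b[["(Intercept)"]] + b[["y85"]], b[["educ"]] + b[["y85:educ"]])

print(rbind(pooled = period1, separate = unname(coef(first))))
print(rbind(pooled = period2, separate = unname(coef(second))))
```

Only the coefficients coincide. The standard errors generally differ because the pooled model imposes a common error variance across the two periods, which is also visible above: the standard error on educ is 0.0069137 in reg2c but 0.0064769 in reg2d.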

Pooled cross sections can be useful in evaluating the effects of a certain event or policy. With data from before and after the event (or policy), one can draw inferences about its impact.

Example 13.3

data(kielmc, package='wooldridge')

# Separate regressions for 1978 and 1981: report coefficients only

coef( lm(rprice~nearinc, data=kielmc, subset=(year==1978)) )

## (Intercept) nearinc

## 82517.23 -18824.37

coef( lm(rprice~nearinc, data=kielmc, subset=(year==1981)) )

## (Intercept) nearinc

## 101307.51 -30688.27

# Joint regression including an interaction term

library(lmtest)

coeftest( lm(rprice~nearinc*y81, data=kielmc) )

##

## t test of coefficients:

##

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 82517.2 2726.9 30.2603 < 2.2e-16 ***

## nearinc -18824.4 4875.3 -3.8612 0.0001368 ***

## y81 18790.3 4050.1 4.6395 5.117e-06 ***

## nearinc:y81 -11863.9 7456.6 -1.5911 0.1125948

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

RegA=lm((rprice)~nearinc*y81, data=kielmc)

RegB=lm((rprice)~nearinc*y81 + age +I(age^2), data=kielmc)

RegC=lm((rprice)~nearinc*y81+age+I(age^2)+log(intst)+log(land)+log(area)+rooms+baths, data=kielmc)

library(stargazer)

stargazer(RegA,RegB,RegC, type="text")

##

## ============================================================================================

## Dependent variable:

## ------------------------------------------------------------------------

## (rprice)

## (1) (2) (3)

## --------------------------------------------------------------------------------------------

## nearinc -18,824.370*** 9,397.936* 10,941.230**

## (4,875.322) (4,812.222) (4,683.912)

##

## y81 18,790.290*** 21,321.040*** 16,270.670***

## (4,050.065) (3,443.631) (2,811.080)

##

## age -1,494.424*** -704.170***

## (131.860) (139.188)

##

## I(age2) 8.691*** 3.496***

## (0.848) (0.855)

##

## log(intst) -7,024.937**

## (3,107.732)

##

## log(land) 10,359.430***

## (2,415.652)

##

## log(area) 32,442.580***

## (5,078.362)

##

## rooms 2,842.505*

## (1,709.086)

##

## baths 7,092.305***

## (2,734.712)

##

## nearinc:y81 -11,863.900 -21,920.270*** -17,767.930***

## (7,456.646) (6,359.745) (5,126.169)

##

## Constant 82,517.230*** 89,116.540*** -238,591.100***

## (2,726.910) (2,406.051) (41,020.560)

##

## --------------------------------------------------------------------------------------------

## Observations 321 321 321

## R2 0.174 0.414 0.643

## Adjusted R2 0.166 0.405 0.632

## Residual Std. Error 30,242.900 (df = 317) 25,543.290 (df = 315) 20,102.690 (df = 310)

## F Statistic 22.251*** (df = 3; 317) 44.591*** (df = 5; 315) 55.854*** (df = 10; 310)

## ============================================================================================

## Note: *p<0.1; **p<0.05; ***p<0.01

#Using log(price)

summary( lm(log(price)~nearinc*y81, data=kielmc) )

##

## Call:

## lm(formula = log(price) ~ nearinc * y81, data = kielmc)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.11957 -0.20328 0.02226 0.18909 1.66604

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 11.28542 0.03051 369.839 < 2e-16 ***

## nearinc -0.33992 0.05456 -6.231 1.48e-09 ***

## y81 0.45700 0.04532 10.084 < 2e-16 ***

## nearinc:y81 -0.06265 0.08344 -0.751 0.453

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.3384 on 317 degrees of freedom

## Multiple R-squared: 0.4091, Adjusted R-squared: 0.4035

## F-statistic: 73.15 on 3 and 317 DF, p-value: < 2.2e-16

In economics and other social sciences, a natural experiment (quasi-experiment) occurs when some exogenous event (for example, a change in government policy) changes the environment in which individuals, firms or cities operate. There is a control group, which is not affected by the policy change, and a treatment group, which is thought to be affected by it. Note that in a true experiment, units are randomly assigned to the two groups. In natural experiments, the assignment of treatment to individuals or cities is not random, so we need to control for differences between the two groups. To control for systematic differences between the control and treatment groups in a natural experiment, we need at least two years of data, one before and one after the policy change. Thus, we can break down our sample into four groups: (1) control group before the policy change, (2) control group after the policy change, (3) treatment group before the policy change, (4) treatment group after the policy change.

We can specify the following equation: \[ y=\beta_0+\delta_0(after)+\beta_1(treated)+\delta_1(after)(treated)+(other\_factors) \] where \(treated\) equals 1 if the observed unit is in the treatment group, and \(after\) equals 1 for all observations in the second time period. Here \(\hat \delta_1\) compares the outcomes of two groups before and after the policy change. \[ \hat \delta_1=(\bar y_{1,treated}-\bar y_{1,control})-(\bar y_{0,treated}-\bar y_{0,control}) \]

In the above equation, our interest lies in \(\delta_1\). We call \(\hat \delta_1\) the difference-in-difference estimator. In essence, it shows the difference between the change in the treatment group and the change in the control group: we compare the outcomes of the units that are affected by the policy change (= treatment group) with those of the units that are not affected (= control group), before and after the policy was enacted. If the change in the treatment group is found to be significantly different from the change in the control group, and no other external factors affected the two groups differently across the two time periods, we have found a good estimator of the causal impact of the program.

For example, suppose the level of unemployment benefits is cut, but only for group A (= treatment group). Group A normally has longer unemployment duration than group B (= control group). If the difference in unemployment duration between group A and group B becomes smaller after the reform, this suggests that reducing unemployment benefits reduces unemployment duration for those affected.

Keep in mind that difference-in-difference only works if the difference in outcomes between the two groups is not changed by factors other than the policy change (e.g. there must be no differential trends).
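The link between the interaction coefficient and the four group means can be verified numerically. The following is a minimal sketch on simulated data; the data frame `did`, the sample size and the assumed policy effect of 0.2 are all hypothetical choices made for illustration.

```r
# Simulated before/after, treated/control data (hypothetical)
set.seed(42)
n <- 2000
did <- data.frame(
  after   = rbinom(n, 1, 0.5),   # 1 = second time period
  treated = rbinom(n, 1, 0.4)    # 1 = treatment group
)
# Assumed true policy effect on the treated after the change: 0.2
did$y <- 1 + 0.1 * did$after + 0.3 * did$treated +
  0.2 * did$after * did$treated + rnorm(n)

fit <- lm(y ~ after * treated, data = did)

# Four cell means: rows index after (0/1), columns index treated (0/1)
m <- with(did, tapply(y, list(after = after, treated = treated), mean))
# (treated - control) after the change minus (treated - control) before it
dd_means <- (m["1", "1"] - m["1", "0"]) - (m["0", "1"] - m["0", "0"])

dd_reg <- coef(fit)[["after:treated"]]
print(c(regression = dd_reg, cell_means = dd_means))
```

Without additional controls the regression is saturated in the two dummies, so the two numbers agree exactly. The advantage of the regression form is that it delivers a standard error and allows other factors to be added.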

Example 13.4

data(injury, package='wooldridge')

reg4=lm(log(durat)~afchnge + highearn + afchnge*highearn, data=injury)

summary(reg4)

##

## Call:

## lm(formula = log(durat) ~ afchnge + highearn + afchnge * highearn,

## data = injury)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.0128 -0.7214 -0.0171 0.7714 4.0047

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 1.19934 0.02711 44.241 < 2e-16 ***

## afchnge 0.02364 0.03970 0.595 0.55164

## highearn 0.21520 0.04336 4.963 7.11e-07 ***

## afchnge:highearn 0.18835 0.06279 2.999 0.00271 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.298 on 7146 degrees of freedom

## Multiple R-squared: 0.01584, Adjusted R-squared: 0.01543

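## F-statistic: 38.34 on 3 and 7146 DF, p-value: < 2.2e-16

Here the coefficient on afchnge:highearn is the DD estimate: after the policy change, the duration for high earners rose by about 0.19 log points (roughly 20%) relative to the other workers, and the estimate is statistically significant (p = 0.003).

It is often a good idea to expand a simple DD (difference-in-difference) methodology by obtaining multiple treatment and control groups as well as more time periods. We can easily create a general framework for policy analysis by allowing a general pattern of interventions, where some units are never treated and others get treated at various time periods.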

Now suppose you have panel data instead of pooled cross section. This means that you have data on the same individuals/firms/cities over a few periods of time.

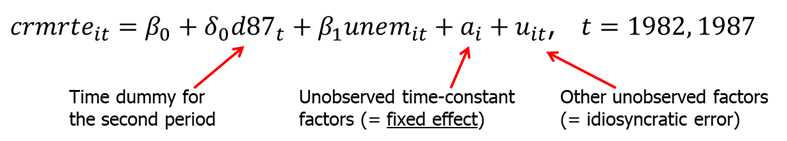

Example. Suppose your job is to find the effect of unemployment on the city crime rate, and that no variables are available other than the crime rate and unemployment. Will it be possible to estimate the causal effect of unemployment on crime? Let \(i\) denote the unit (city) and \(t\) denote the time period. We can now write an example fixed effects model explaining the crime rate: \[ crmrte_{it}=\beta_0 + \delta_0*d87_t + \beta_1*unem_{it} + a_i + u_{it}, \quad t=(1,2) \text{ or } (1982,1987) \]

The dummy variable \(d87_t\) equals 1 for the year 1987 and 0 for the year 1982. Variable \(a_i\) is referred to as a fixed effect (it is fixed over time, hence no \(t\) subscript). In this case, it represents the unobserved city effect, such as geographical features, demographic features (assuming they do not change over a short period of time) and so on. How crime rates are reported may also be in \(a_i\). The error \(u_{it}\) is often called the idiosyncratic error or time-varying error because it represents unobserved factors that change over time.

If we simply pool the data and run an OLS regression, we will run into a big problem. Since \(a_i\) will be in the error term, and we know that those factors in \(a_i\) are most likely correlated with unemployment, we will get biased estimators. The resulting bias in pooled OLS is sometimes called heterogeneity bias but really it is simply a bias due to omitting a time-constant variable.

To run a pooled OLS, use the following:

data(crime2, package='wooldridge')

reg5=lm(crmrte~d87+unem, data=crime2)

summary(reg5)

##

## Call:

## lm(formula = crmrte ~ d87 + unem, data = crime2)

##

## Residuals:

## Min 1Q Median 3Q Max

## -53.474 -21.794 -6.266 18.297 75.113

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 93.4202 12.7395 7.333 9.92e-11 ***

## d87 7.9404 7.9753 0.996 0.322

## unem 0.4265 1.1883 0.359 0.720

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 29.99 on 89 degrees of freedom

## Multiple R-squared: 0.01221, Adjusted R-squared: -0.009986

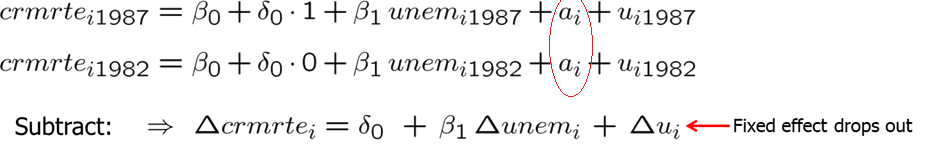

## F-statistic: 0.5501 on 2 and 89 DF, p-value: 0.5788We find that the resulting sign on the estimate of unemp is in the right direction but the significance is very low. Using pooled OLS does not solve omitted variable problem. Thus, we should do something more sophisticated. In the crime equation, we want to allow the unmeasured city factors in \(a_i\) that affect the crime rate also to be correlated with the unemployment rate. Since a_i does not change over time, we can difference the data across two years. This would look as follows:

The resulting equation is called the first-differenced equation. It is just like a cross-sectional equation, but each variable is differenced over time. The unobserved effect, \(a_i\), does not appear in the equation because it has been differenced away. The intercept in the final equation is also constant over time (what we wanted). Before estimating, we should ask whether the OLS assumptions are satisfied, above all exogeneity: is the change in \(u\) uncorrelated with the change in unemployment? For example, suppose that law enforcement effort (which is in \(u\)) increases more in cities where the unemployment rate decreases. This would violate the exogeneity assumption because the change in \(u\) would be negatively correlated with the change in unemployment. Including more factors in the regression equation allows us to partly overcome this problem. We also need some variation across units in the changes of the independent variables.

We can now estimate our model using the following code:

data(crime2, package='wooldridge')

reg6=lm(ccrmrte~cunem, data=crime2)

summary(reg6)

##

## Call:

## lm(formula = ccrmrte ~ cunem, data = crime2)

##

## Residuals:

## Min 1Q Median 3Q Max

## -36.912 -13.369 -5.507 12.446 52.915

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 15.4022 4.7021 3.276 0.00206 **

## cunem 2.2180 0.8779 2.527 0.01519 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 20.05 on 44 degrees of freedom

## (46 observations deleted due to missingness)

## Multiple R-squared: 0.1267, Adjusted R-squared: 0.1069

## F-statistic: 6.384 on 1 and 44 DF, p-value: 0.01519

The above results point to a positive, statistically significant relationship between unemployment and the crime rate (the coefficient on cunem is 2.22 with a p-value of 0.015). This shows that eliminating the time-constant effects by differencing was very important. Also, the intercept (15.40) shows that even if the change in unemployment is zero, we predict an increase in the crime rate. This reflects a secular upward trend in crime rates over that period.
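To see why differencing matters, it can help to simulate a two-period panel in which the unobserved effect is correlated with the regressor. The sketch below is an illustration under assumed values only: every number in it, including the true slope of 2 and the names `a`, `unem1`, `crm1` and so on, is hypothetical and unrelated to crime2.

```r
# Simulated two-period city panel (hypothetical data)
set.seed(1)
n <- 500                       # number of cities
a <- rnorm(n)                  # unobserved, time-constant city effect a_i
# Unemployment is built to be negatively correlated with a_i
unem1 <- 6 - 1.5 * a + rnorm(n)
unem2 <- 6 - 1.5 * a + rnorm(n)
beta1 <- 2                     # assumed true effect of unemployment
crm1 <- 90 + beta1 * unem1 + 10 * a + rnorm(n, sd = 3)
crm2 <- 90 + 5 + beta1 * unem2 + 10 * a + rnorm(n, sd = 3)

# Pooled OLS on the stacked data: a_i is left in the error term
long <- data.frame(
  crmrte = c(crm1, crm2),
  unem   = c(unem1, unem2),
  d2     = rep(c(0, 1), each = n)
)
pooled <- lm(crmrte ~ d2 + unem, data = long)

# First differences: a_i cancels and the period shift becomes the intercept
fd <- lm(I(crm2 - crm1) ~ I(unem2 - unem1))

print(c(pooled = coef(pooled)[["unem"]], fd = coef(fd)[[2]]))
```

In this setup pooled OLS is pulled far away from the assumed slope because `a` is an omitted variable correlated with unemployment, while the first-differenced estimate should land close to it.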

Example 13.5

data(slp75_81,package='wooldridge')

attach(slp75_81)

regE5=lm(cslpnap~+ctotwrk+ceduc+cmarr+cyngkid+cgdhlth)

summary(regE5)

##

## Call:

## lm(formula = cslpnap ~ +ctotwrk + ceduc + cmarr + cyngkid + cgdhlth)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2454.2 -307.2 79.8 334.4 2037.9

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -92.63404 45.86590 -2.020 0.0446 *

## ctotwrk -0.22667 0.03605 -6.287 1.58e-09 ***

## ceduc -0.02447 48.75938 -0.001 0.9996

## cmarr 104.21395 92.85536 1.122 0.2629

## cyngkid 94.66540 87.65252 1.080 0.2813

## cgdhlth 87.57785 76.59913 1.143 0.2541

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 598.6 on 233 degrees of freedom

## Multiple R-squared: 0.1495, Adjusted R-squared: 0.1313

## F-statistic: 8.191 on 5 and 233 DF, p-value: 3.827e-07

Example 13.6

data(crime3,package='wooldridge')

attach(crime3)

regE6=lm(clcrime~d78+clrprc1+clrprc2)

summary(regE6)

##

## Call:

## lm(formula = clcrime ~ d78 + clrprc1 + clrprc2)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.71931 -0.28433 0.04374 0.18731 1.01998

##

## Coefficients: (1 not defined because of singularities)

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.6268644 0.1861254 3.368 0.00146 **

## d78 NA NA NA NA

## clrprc1 0.0007601 0.0056674 0.134 0.89385

## clrprc2 -0.0114357 0.0058377 -1.959 0.05571 .

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.3625 on 50 degrees of freedom

## (53 observations deleted due to missingness)

## Multiple R-squared: 0.1074, Adjusted R-squared: 0.07165

## F-statistic: 3.007 on 2 and 50 DF, p-value: 0.05848

When dealing with panel data, a researcher should store the data carefully so that different units and time periods are easily linked. One way to do this is to have two entries in the data set for each unit: one for time period 1 and another for time period 2. The entries for the second unit then follow, again one for each time period. This layout makes it easy to use the data both for panel analysis and for pooled cross-sectional analysis.

| Unit | Time Period | Wage | Married | Child |
|---|---|---|---|---|
| 1 | 1 | … | … | … |
| 1 | 2 | … | … | … |
| 2 | 1 | … | … | … |
| 2 | 2 | … | … | … |
| 3 | 1 | … | … | … |
| 3 | 2 | … | … | … |

Another approach is to store each variable in a separate column for each time period, so that there is only one entry for each unit.

| Unit | Wage_1 | Wage_2 | Married_1 | Married_2 | Child_1 | Child_2 |
|---|---|---|---|---|---|---|
| 1 | … | … | … | … | … | … |
| 2 | … | … | … | … | … | … |
| 3 | … | … | … | … | … | … |
| 4 | … | … | … | … | … | … |
| 5 | … | … | … | … | … | … |
| 6 | … | … | … | … | … | … |
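Moving between the two layouts can be done with base R's `reshape()`. The sketch below uses a small made-up data set (the data frame `long` and its values are hypothetical) and converts the first layout into the second, after which first differences are simple column subtractions.

```r
# Hypothetical three-unit, two-period panel in long format
long <- data.frame(
  unit    = rep(1:3, each = 2),
  period  = rep(1:2, times = 3),
  wage    = c(10, 11, 15, 15, 8, 9.5),
  married = c(0, 1, 1, 1, 0, 0)
)

# Long -> wide: one row per unit, one column per variable and period
wide <- reshape(long, direction = "wide",
                idvar = "unit", timevar = "period",
                v.names = c("wage", "married"), sep = "_")

# With one row per unit, first differences are column subtractions
wide$cwage    <- wide$wage_2 - wide$wage_1
wide$cmarried <- wide$married_2 - wide$married_1
print(wide)
```

The long layout is usually more convenient for pooled regressions and for panel packages, while the wide layout makes two-period changes easy to compute by hand.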

Panel data sets are very useful for policy analysis, in particular, program evaluation. A sample of individuals, firms, cities, is obtained in the first period. Some of these take part in the program (treatment group) and others do not (control group).

For example, let’s assume we are asked to evaluate the effect of a Michigan job training program on the productivity of workers in manufacturing firms in 1987 and 1988. \[ scrap_{it}=\beta_0 + \delta_0*y88_t + \beta_1*grant_{it} + a_i + u_{it}, \quad t=(1,2) \text{ or } (1987,1988) \] \(scrap_{it}\) is the scrap rate of firm \(i\) in period \(t\), \(grant_{it}\) is a dummy variable equal to one if firm \(i\) received the job training grant in period \(t\), \(y88_t\) is a dummy variable for observations in 1988, and \(a_i\) is the unobserved, time-invariant firm effect.

The problem lies in the fact that \(a_i\) may be systematically related to whether a firm gets a grant. Since grants are typically not assigned randomly, some kinds of firms may be preferred to others. To eliminate this problem, which would otherwise produce biased and inconsistent estimators, we can use first differencing. This yields the following: \[ \Delta scrap_i = \delta_0 + \beta_1*\Delta grant_{i} + \Delta u_{i} \]

We simply regress the change in the scrap rate on the change in the grant indicator. We have removed the unobserved firm characteristics from the regression, eliminating the main problem. We can also use \(\log(scrap_{it})\) as the dependent variable to estimate the percentage effect. After differencing, the model is: \[ \Delta \log (scrap_i) = \delta_0 + \beta_1*\Delta grant_{i} + \Delta u_{i} \]

Estimating the above two equations yields the following results. Check out the R code to replicate the analysis.

Example 13.7

data(traffic1,package='wooldridge')

attach(traffic1)

regE7=lm(cdthrte~copen+cadmn)

summary(regE7)

##

## Call:

## lm(formula = cdthrte ~ copen + cadmn)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.25261 -0.14337 -0.00321 0.19679 0.79679

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -0.49679 0.05243 -9.476 1.43e-12 ***

## copen -0.41968 0.20559 -2.041 0.0467 *

## cadmn -0.15060 0.11682 -1.289 0.2035

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.3435 on 48 degrees of freedom

## Multiple R-squared: 0.1187, Adjusted R-squared: 0.08194

## F-statistic: 3.231 on 2 and 48 DF, p-value: 0.04824

Just as we used differencing with two time periods, we can use the same technique with more than two periods. For example, suppose you have data on 10 individuals (N=10) in 3 different time periods (T=3). The general unobserved effects model is: \[ y_{it} = \delta_1 + \delta_2*d2_t + \delta_3*d3_t + \beta_1*x_{it1} + \beta_2*x_{it2} + ... + \beta_k*x_{itk} + a_i + u_{it} \]

The total number of observations is N*T because each individual is observed T times. The key assumption is that the idiosyncratic errors are uncorrelated with the explanatory variables in each time period: \[ Cov(x_{itj}, u_{is}) = 0 \quad \text{for all } t, s, \text{ and } j \] Omitted time-varying variables or measurement error in the explanatory variables are very likely to violate this assumption.

As previously, we can eliminate the \(a_i\) by differencing adjacent periods. In the three period case, we subtract time period one from time period two, and next we subtract time period two from time period three. This gives us two observed changes (for t = 2 and 3): \[ \Delta y_{it} = \delta_2*\Delta d2_t + \delta_3*\Delta d3_t + \beta_1*\Delta x_{it1} + \beta_2*\Delta x_{it2} + ... + \beta_k*\Delta x_{itk} + \Delta u_{it} \]

We do not have a differenced equation for t=1 because there is no observation in the year prior to t=1. The above differenced equation therefore covers two time periods for each individual in the sample. If the equation satisfies the classical linear model assumptions, then pooled OLS gives unbiased estimators, and the usual t and F statistics are valid for hypothesis testing. The important requirement for OLS is that \(\Delta u_{it}\) is uncorrelated with \(\Delta x_{itj}\) for all j and t = 2 and 3.

As you can notice, in the differenced equation above the intercept is not present (as it was differenced away). For the purpose of computing the R-squared, we can use a simple transformation by reworking the time dummies to obtain the following equation: \[ \Delta y_{it} = \alpha_0 + \alpha_1*d3_t + \beta_1*\Delta x_{it1} + \beta_2*\Delta x_{it2} + ... + \beta_k*\Delta x_{itk} + \Delta u_{it} \]
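As a short worked step (a sketch that uses only the definitions above), the link between the two sets of time coefficients follows from differencing the dummies. Since \(d2_t\) equals 1 only in period 2 and \(d3_t\) equals 1 only in period 3: \[ \Delta d2_t = \begin{cases} 1 & t=2 \\ -1 & t=3 \end{cases} \qquad \Delta d3_t = \begin{cases} 0 & t=2 \\ 1 & t=3 \end{cases} \] so the time terms \(\delta_2*\Delta d2_t + \delta_3*\Delta d3_t\) equal \(\delta_2\) for t = 2 and \(\delta_3 - \delta_2\) for t = 3. Matching these two values with \(\alpha_0 + \alpha_1*d3_t\) gives: \[ \alpha_0 = \delta_2, \qquad \alpha_1 = \delta_3 - 2*\delta_2 \] The slope coefficients \(\beta_1, ..., \beta_k\) are the same in both versions of the equation; only the way the period effects are parameterized changes.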

If we have the same T time periods for each of N cross-sectional units, we say that the data set is a balanced panel: we have the same time periods for all individuals, firms or cities.

If the researcher detects serial correlation (\(\Delta u_{i,t}\) and \(\Delta u_{i,t-1}\) are correlated), it is possible to adjust the standard errors to allow for unrestricted forms of serial correlation and heteroskedasticity. If there is no serial correlation in the errors, the usual methods for dealing with heteroskedasticity are valid. We can use the Breusch-Pagan and White tests for heteroskedasticity and simply use the usual heteroskedasticity-robust standard errors.

Often, we are interested in whether slope coefficients have changed over time. We can easily carry out such tests by interacting the explanatory variables of interest with time-period dummies. Suppose you observe a random sample of workers in three years, 2000, 2002 and 2004, and specify the following model: \[ log(wage_{it}) = \beta_0 + \delta_1*d02_t + \delta_2*d04_t + \beta_1*female_i + \gamma_1*d02_t*female_i + \gamma_2*d04_t*female_i + ... + a_i + u_{it} \]

In this case, first differencing will eliminate the intercept for the year 2000 (\(\beta_0\)) and the gender wage gap for 2000 (\(\beta_1*female_i\)). However, the change in \(d02_t*female_i\), which is \(\Delta (d02_t)*female_i\), does not disappear, so we are able to estimate how the wage gap in 2002 and, similarly, in 2004 has changed relative to 2000.

Example 13.9

library(plm);library(lmtest)

data(crime4, package='wooldridge')

crime4.p <- pdata.frame(crime4, index=c("county","year") )

pdim(crime4.p)

## Balanced Panel: n = 90, T = 7, N = 630

# manually calculate first differences of crime rate:

crime4.p$dcrmrte <- diff(crime4.p$crmrte)

# Display selected variables for observations 1-9:

crime4.p[1:9, c("county","year","crmrte","dcrmrte")]

## county year crmrte dcrmrte

## 1-81 1 81 0.0398849 NA

## 1-82 1 82 0.0383449 -0.0015399978

## 1-83 1 83 0.0303048 -0.0080401003

## 1-84 1 84 0.0347259 0.0044211000

## 1-85 1 85 0.0365730 0.0018470995

## 1-86 1 86 0.0347524 -0.0018206015

## 1-87 1 87 0.0356036 0.0008512028

## 3-81 3 81 0.0163921 NA

## 3-82 3 82 0.0190651 0.0026730001

# Estimate FD model:

coeftest(plm(log(crmrte)~d83+d84+d85+d86+d87+lprbarr+lprbconv+lprbpris+lavgsen

+lpolpc,data=crime4.p, model="fd") )

##

## t test of coefficients:

##

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.0077134 0.0170579 0.4522 0.6513193

## d83 -0.0998658 0.0238953 -4.1793 3.421e-05 ***

## d84 -0.1478033 0.0412794 -3.5806 0.0003744 ***

## d85 -0.1524144 0.0584000 -2.6098 0.0093152 **

## d86 -0.1249001 0.0760042 -1.6433 0.1009087

## d87 -0.0840734 0.0940003 -0.8944 0.3715175

## lprbarr -0.3274942 0.0299801 -10.9237 < 2.2e-16 ***

## lprbconv -0.2381066 0.0182341 -13.0583 < 2.2e-16 ***

## lprbpris -0.1650463 0.0259690 -6.3555 4.488e-10 ***

## lavgsen -0.0217606 0.0220909 -0.9850 0.3250506

## lpolpc 0.3984264 0.0268820 14.8213 < 2.2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

We have discussed that differencing allows us to eliminate constant unobserved effects, which is extremely useful in policy analysis. However, there are a few things we need to keep in mind: (1) we need sufficient variation in the explanatory variables over time, (2) first-differenced estimation can also be subject to serious bias when the strict exogeneity assumption fails, and (3) measurement error in the explanatory variables may result in potentially sizable bias.

Homework Problems

Problem 5.

Suppose that we want to estimate the effect of several variables on annual saving and that we have a panel data set on individuals collected on January 31, 1990, and January 31, 1992. If we include a year dummy for 1992 and use first differencing, can we also include age in the original model? Explain.

Computer Exercise C1.

Use data set fertil1 from package wooldridge for this exercise.

1. In the equation estimated in Example 13.1, test whether living environment at age 16 has an effect on fertility. (The base group is large city.) Report the value of the F statistic and the p-value.

2. Test whether region of the country at age 16 (South is the base group) has an effect on fertility.

3. Let \(u\) be the error term in the population equation. Suppose you think that the variance of \(u\) changes over time (but not with educ, age, and so on). A model that captures this is \(u^2 = \gamma_0 + \gamma_1*y74 + \gamma_2*y76 + ... + \gamma_6*y84 + v\). Using this model, test for heteroskedasticity in \(u\). (Hint: Your F test should have 6 and 1,122 degrees of freedom.)

4. Add the interaction terms \(y74*educ, y76*educ, ..., y84*educ\) to the model estimated in Table 13.1. Explain what these terms represent. Are they jointly significant?

Computer Exercise C3.

Use data set kielmc from package wooldridge for this exercise.

1. The variable \(dist\) is the distance from each home to the incinerator site, in feet. Consider the model: \(log(price) = b_0 + d_0*y81 + b_1*log(dist) + d_1*y81*log(dist) + u.\) If building the incinerator reduces the value of homes closer to the site, what is the sign of \(d_1\)? What does it mean if \(b_1 > 0\)?

2. Estimate the model from part (1) and report the results in the usual form. Interpret the coefficient on \(y81*log(dist)\). What do you conclude?

3. Add \(age\), \(age^2\), \(rooms\), \(baths\), \(log(intst)\), \(log(land)\), and \(log(area)\) to the equation. Now, what do you conclude about the effect of the incinerator on housing values?

4. Why is the coefficient on \(log(dist)\) positive and statistically significant in part (2) but not in part (3)? What does this say about the controls used in part (3)?

Computer Exercise C5.

Use data set rental from package wooldridge for this exercise. The data for the years 1980 and 1990 include rental prices and other variables for college towns. The idea is to see whether a stronger presence of students affects rental rates. The unobserved effects model is \(log(rent_{it}) = b_0 + d_0*y90_t + b_1*log(pop_{it}) + b_2*log(avginc_{it}) + b_3*pctstu_{it} + a_i + u_{it}\), where \(pop\) is city population, \(avginc\) is average income, and \(pctstu\) is student population as a percentage of city population (during the school year).

1. Estimate the equation by pooled OLS and report the results in standard form. What do you make of the estimate on the 1990 dummy variable? What do you get for \(b_3\)?

2. Are the standard errors you report in part (1) valid? Explain.

3. Now, difference the equation and estimate by OLS. Compare your estimate of \(b_3\) with that from part (1). Does the relative size of the student population appear to affect rental prices?

4. Obtain the heteroskedasticity-robust standard errors for the first-differenced equation in part (3). Does this change your conclusions?

References

Wooldridge, J. (2019). Introductory econometrics: a modern approach. Boston, MA: Cengage.

Heiss, F. (2016). Using R for introductory econometrics. Düsseldorf: Florian Heiss, CreateSpace.